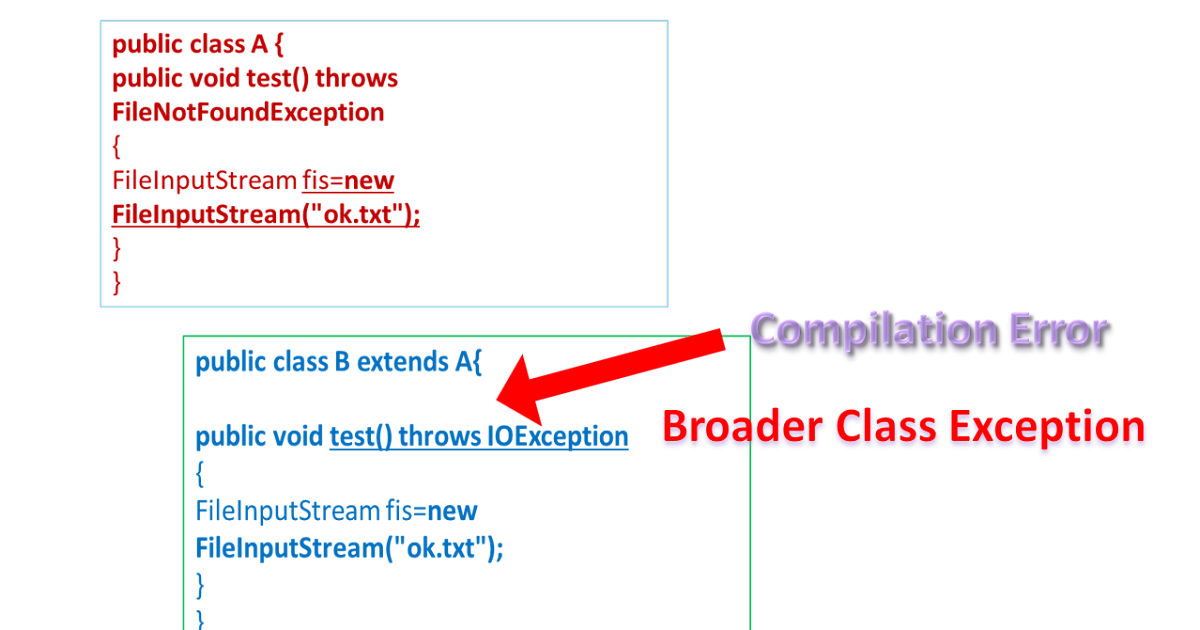

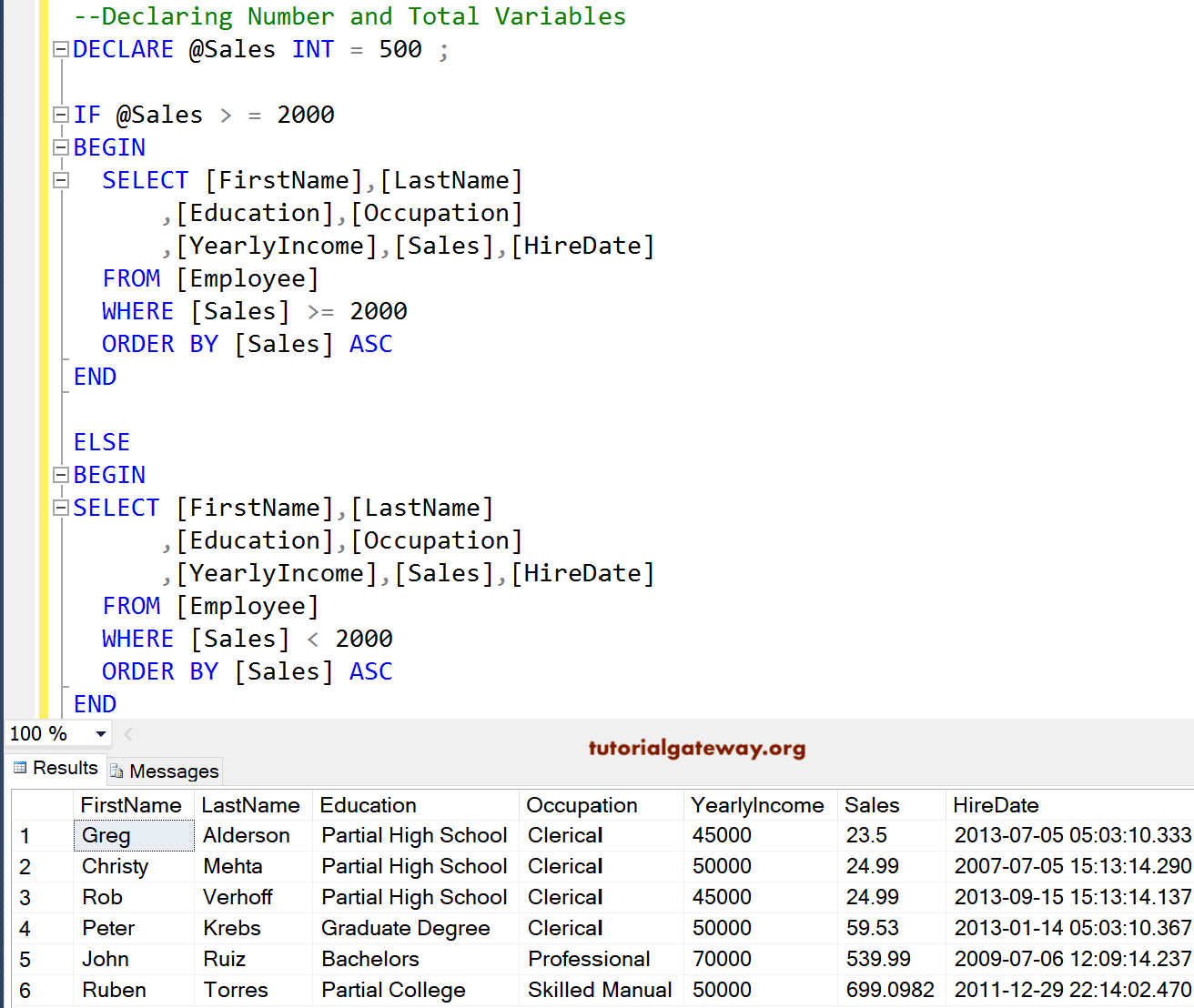

Oracle identifies direct path IO to temporary segments through the wait events direct path read temp and direct path write temp.

DATA FILE WRITE IO

By default, DML operations (INSERT, UPDATE, DELETE, and MERGE) modify blocks of data in the buffer cache. The dirty blocks are written to disk by the Database Writer (DBWR) process at some later time. Should the DBWR fail to keep up with the modifications, free buffer waits might result; we discussed these in Chapter 17. Only if asynchronous IO is enabled will the DBWR be able to keep the buffer cache "clean" in DML-intensive environments. However, interpreting the associated wait event can be difficult because the asynchronous IO mechanism results in many IO operations proceeding without the DBWR actively waiting. Another common direct path write scenario is when a session performs a direct path append insert. Direct path write operations are visible as direct path write waits.

REDO LOG IO

Redo logs record transaction information sufficient to recover the database if a database failure occurs. When a session issues a DML statement, it makes entries in a redo log buffer in the SGA. For the transaction to be recovered if a failure occurs, these redo log entries need to be written to disk when the transaction commits. However, you can configure Oracle to defer or batch these writes using the COMMIT_WAIT and COMMIT_LOGGING parameters or the COMMIT_WRITE parameter. Because redo log writes are sequential, disk seek time can be very low for redo log write operations.

Free buffer waits occur when a session wants to introduce a new block into the buffer cache, but all blocks are modified. This occurs when the database writer cannot write the blocks out to disk as fast as they are being modified. Optimizing data file IO is usually indicated, particularly asynchronous and filesystem direct IO. Increasing the size of the buffer cache may also relieve the contention if the DBWR is falling behind only sporadically.
Direct path read and write operations bypass the buffer cache and do not suffer from, or contribute to, free buffer waits. The recovery writer (RVWR) process writes before-image records of changed blocks to the flashback logs when the database is operating in flashback database mode. Flashback buffer waits (flashback buf free by RVWR) occur if the small flashback buffer cannot be cleared quickly enough.

Ensuring the flashback logs are on fast dedicated devices can help. Increasing the size of the flashback buffer is an unsupported procedure that might, nevertheless, reduce flashback waits. Buffer busy waits occur when there is contention for rows in the same block. Splitting rows across multiple blocks might relieve the contention. Possible options include partitioning, hash clustering, or setting high values for PCTFREE. The redo log buffer is used to accumulate redo log entries that will eventually be written to the redo log. Sessions experience log buffer space waits if they want to generate redo but the buffer is full. Aside from optimizing redo log IO, you can explore the use of unlogged and direct path inserts that generate reduced redo entries. Increasing the size of the log buffer can relieve short-term log buffer space waits.

The rows returned are locked because of the FOR UPDATE clause. An operation that retrieves rows from a table function (that is, FROM TABLE()). An access to DUAL that avoids reading from the buffer cache. Rows from a result set not matching a selection criterion are eliminated. An external database is accessed through a database link. An Oracle sequence generator is used to obtain a unique sequence number. Perform the next operation once for each value in an IN list. Denotes a direct path INSERT based on a SELECT statement. Internal operation to support an analytic function such as OVER(). An analytic function requires a sort to implement the RANK() function.

Direct path inserts, as described in Chapter 14, "DML Tuning," bypass the buffer cache and so do not contribute to free buffer waits.

Direct path disk reads are normally used when Oracle performs parallel query, and in Oracle 11g might also be used when performing serial full table scans. Because blocks read in this manner are not introduced into the buffer cache, they won't contribute to, or suffer from, free buffer waits. See Chapter 18 for more information on direct path IO.

The capability of the DBWR to write blocks to the datafiles is ultimately limited by the speed and the bandwidth of the disks that support these datafiles. Provided the datafiles are properly striped, adding disks to the volume might increase bandwidth; see Chapter 21, "Disk IO Tuning Fundamentals," for a more detailed discussion. RAID 5 and similar parity-based redundancy schemes impose a heavy penalty on IO write times. Imposing RAID 5 or similar on Oracle data files is a perfect recipe for creating free buffer wait contention. Increasing the buffer cache size can reduce free buffer waits by giving the DBWR more time to catch up between IO write peaks. Figure 17-4 illustrates the reduction in free buffer waits when the relevant database cache was increased from 50M to 400M.

A latch is similar to a lock, but on shared memory rather than on table data. Latch contention is discussed in detail in Chapter 16, "Latch and Mutex Contention." The server is waiting for a mutex while adding or modifying a cached SQL statement in the shared pool. Waiting for a lock on the cached copies of the data dictionary tables. A block in the buffer cache is being accessed by another session. See Chapter 17, "Shared Memory Contention," for more details.

Waiting for a log file to switch, perhaps because the log isn't archived or checkpointed. See Chapter 21, "Disk IO Tuning Fundamentals," for more details. Waiting for a block to be written to disk by the DBWR. This normally occurs when reading from certain V$ tables or when performing system operations. Single block writes to the datafiles, possibly writing the file header. Writes that were made directly to the temporary datafile, bypassing the buffer cache and not involving the DBWR. Reads from the temporary tablespace that bypass the buffer cache. See Chapter 19, "Optimizing PGA Memory," and Chapter 21 for more details. Waiting for another session to read data into the buffer cache. An operation in which multiple blocks are read into the buffer cache in a single operation.

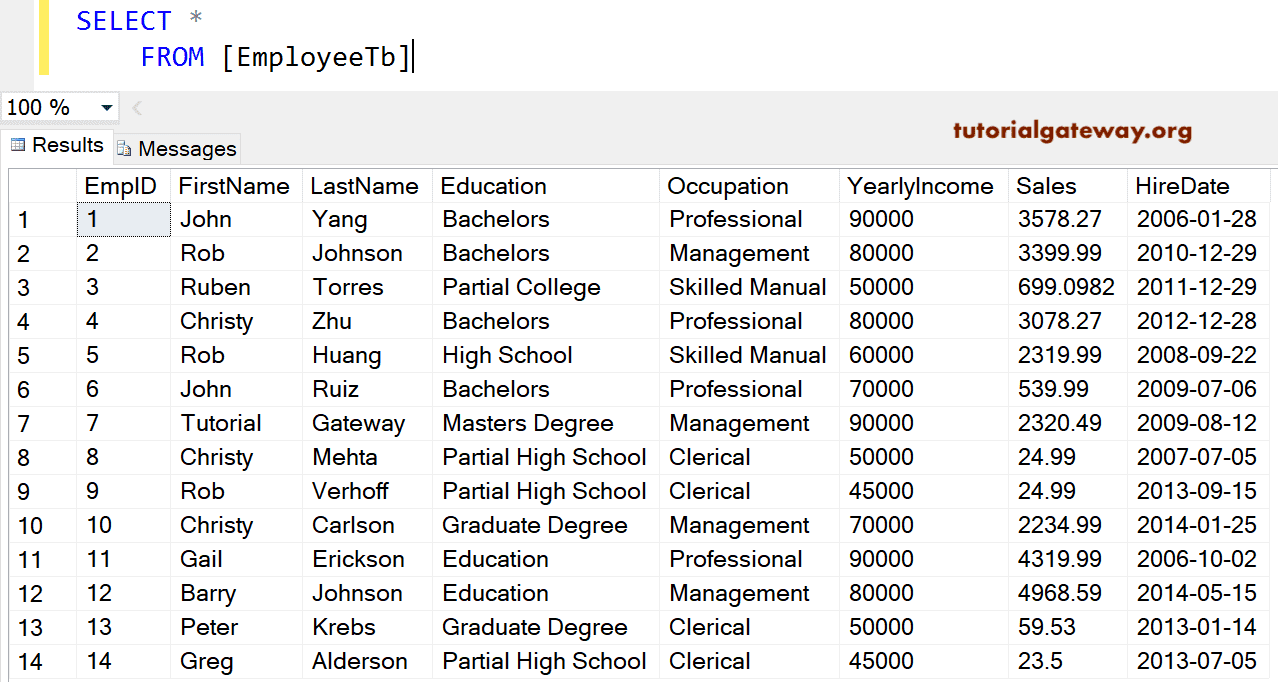

Reading from disk as part of a full table scan or other multiblock read. Reading a single block from disk, usually as a result of an indexed read.

Heap organized table: This is the default table type; when you use CREATE TABLE without any specific options, you end up with a heap table. The term heap signifies that rows are stored in no particular order. Every row in a heap table is identified by a ROWID, which can be used to locate the row on disk.

Hash clustered table: This is a table in which the physical location of a row is determined by the primary key. This allows a row to be retrieved rapidly through the primary key without requiring an index lookup. A sorted hash cluster is a variation in which rows for a particular hash value are retrieved in a specific sort sequence.

Index organized table: This is structured like a B-tree index in which the "leaf" block contains the row itself rather than, as in a real B-tree index, a pointer to the row.

Index cluster: The index cluster stores multiple tables in the same segment, with rows that share a common key stored together.

Object tables are identified by object REFs rather than by primary key and can have a more complex internal structure than a normal table.

Nested table: This is an object type that has the characteristics of a relational table and that can be "nested" inside a column of a heap table. Each master row in the table can have detail rows stored within the nested table column.

External tables: These are tables that map to files stored outside the database. They are most typically used for accessing files that need to be loaded into the database without the intermediate step of loading into a staging table.

Temporary tables: A temporary table can be explicitly or implicitly created to store data that will not persist beyond the current session or transaction.

Buffered datafile IO: db file sequential read and db file scattered read occur when a session reads blocks of data into the buffer cache from a data file.

Increasing the size of the buffer cache can be successful in reducing this type of IO. IO to temporary segments, by contrast, can be reduced by changing the PGA memory configuration. Direct path reads, other than those from temporary segments, occur when Oracle bypasses the buffer cache. This type of IO is generally unaffected by memory configuration. System IO, such as writes to redo logs and database files, is performed by Oracle background processes and generally is not directly affected by memory optimization.

Oracle maintains other caches in the SGA as well, such as the redo log buffer, which buffers IO to the redo log files. Chapter 2, "Oracle Architecture and Concepts," provides a review of the components and architecture of the SGA. The sharing of memory creates the potential for contention and requires that Oracle serialize (restrict concurrent access to) some areas of shared memory to prevent corruption.

If two steps have the same parent, the step with the lowest position will be executed first. The type of operation being performed; for example, TABLE ACCESS or SORT. For example, in the case of TABLE SCAN, the option could be FULL or BY ROWID. If this is a distributed query, this column indicates the database link used to reference the object. For a parallel query, it might nominate a temporary result set. Optimizer goal in effect when the statement was explained. For a distributed query, this might contain the text of the SQL sent to the remote database. For a parallel query, it indicates the SQL statement executed by the parallel slave processes. Contains additional information in an XML document. This includes version information, SQL Profile or outlines used, dynamic sampling, and plan hash value. This can denote whether the step is being executed remotely in a distributed SQL statement or the nature of parallel execution.

The relative cost of the operation as estimated by the optimizer. The number of rows that the optimizer expects will be returned by the step. The number of bytes expected to be returned by the step. If partition elimination is to be performed, this column indicates the start of the range of partitions that will be accessed. It might also contain the keywords KEY or ROW LOCATION, which indicate that the partitions to be accessed will be determined at run time. Indicates the end of the range of partitions to be accessed. This column lists the execution plan ID for the execution plan step that determined the partitions identified by PARTITION_START and PARTITION_END. This column describes how rows from one set of parallel query slaves (the "producers") are allocated to the subsequent "consumer" slaves. Possible values are PARTITION (ROWID), PARTITION (KEY), HASH, RANGE, ROUND-ROBIN, BROADCAST, QC (ORDER), and QC (RANDOM). These options are discussed further in Chapter 13, "Parallel SQL." Estimated CPU cost of the operation.

Asynchronous IO is important for the DBWR because otherwise it will almost certainly fall behind when multiple user sessions modify blocks concurrently. When datafiles are on filesystems, as opposed to Automatic Storage Management (ASM) or raw devices, asynchronous IO is controlled by the parameter FILESYSTEMIO_OPTIONS. In addition, filesystems usually have their own buffer cache that reduces disk IO in a similar way to Oracle's buffer cache. Sometimes the combination of Oracle's buffer cache and the filesystem buffer cache can work in your favor. However, for the DBWR the filesystem buffer cache simply gets in the way because the DBWR has to write through the cache to ensure that the IO makes it to disk. Filesystem direct IO allows the DBWR and other Oracle processes to bypass the filesystem buffer cache. Both asynchronous IO and filesystem direct IO tend to help reduce free buffer waits.
There's no real drawback to asynchronous IO, but filesystem direct IO might have the effect of increasing disk read waits. This is because the filesystem buffer cache sometimes reduces IO read times by keeping some filesystem blocks in memory. Figure 17-3 shows how the various settings of FILESYSTEMIO_OPTIONS affected the free buffer wait times experienced by our earlier example.
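
The relationship between the FILESYSTEMIO_OPTIONS settings and the IO modes they enable can be sketched as a small lookup. This is an illustrative sketch only: the helper name `filesystem_io_modes` and the dictionary are hypothetical and not part of any Oracle tool, and the sketch assumes datafiles stored on a filesystem, where asynchronous IO also requires DISK_ASYNCH_IO to be TRUE.

```python
# Hypothetical lookup: FILESYSTEMIO_OPTIONS value -> (asynchronous IO, direct IO).
FILESYSTEMIO_MODES = {
    "NONE": (False, False),
    "ASYNCH": (True, False),
    "DIRECTIO": (False, True),
    "SETALL": (True, True),
}


def filesystem_io_modes(filesystemio_options: str, disk_asynch_io: bool = True) -> dict:
    """Return which IO modes a parameter combination enables for filesystem datafiles."""
    key = filesystemio_options.strip().upper()
    if key not in FILESYSTEMIO_MODES:
        raise ValueError(f"unknown FILESYSTEMIO_OPTIONS value: {filesystemio_options!r}")
    asynch, direct = FILESYSTEMIO_MODES[key]
    return {
        # Asynchronous IO needs both parameters to agree.
        "asynchronous_io": asynch and disk_asynch_io,
        "direct_io": direct,
    }


if __name__ == "__main__":
    for setting in FILESYSTEMIO_MODES:
        print(setting, filesystem_io_modes(setting))
```

A lookup like this is only a reading aid; whether a given mode actually helps depends on the trade-off between free buffer waits and disk read time on the system in question.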

Both asynchronous IO and filesystem direct IO were effective in reducing free buffer waits. Note, however, that enabling filesystem direct IO reduced free buffer waits but also increased data file read time. In this example the net effect was positive; in other situations the increase in disk read time may be more costly than the reduction in free buffer waits. To enable asynchronous IO, you should make sure that the value of DISK_ASYNCH_IO is set to TRUE and the value of FILESYSTEMIO_OPTIONS is set to ASYNCH or SETALL. To enable filesystem direct IO, the value of FILESYSTEMIO_OPTIONS should be set to DIRECTIO or SETALL.

Listener: One or more listener processes will be active on every host that contains a database server. The listener accepts requests for connection and, in the case of a dedicated server connection, creates a server process to manage that connection. In the case of a shared server connection, the listener passes the request to a dispatcher process that mediates between sessions and shared servers.

Database writer (DBWR): Server processes read from database files, but most of the time it is the Database Writer process that writes changes to these files. Instead of writing changes out immediately, it writes the changes at some convenient later time. As a result, database sessions do not normally need to wait for writes to disk, although there are "direct" IO modes in which sessions write directly to the database files.

The Log Writer (LGWR) writes redo log entries periodically and almost always when a COMMIT statement is issued. In certain circumstances these writes can be asynchronous or batched. (See Chapter 14 for more details.)

Log Archiver: The Log Archiver copies modified redo logs to archived logs that can be used to recover the database if a disk failure occurs.

Recovery writer (RVWR): The Recovery writer writes "undo" information, similar to rollback segment information, to the flashback log files.

Data is returned to the user as a result of executing SQL statements such as query expressions or function calls. All statements are compiled prior to execution, and the return type of the data is known after compilation and before execution. Therefore, once a statement is prepared, the data type of each column of the returned result is known, including any precision or scale property. The type does not change when the same query that returned one row returns many rows as a result of adding more data to the tables. SQL Standard compliance is the most distinctive attribute of HyperSQL.

HyperSQL can provide database access within the user's application process, within an application server, or as a separate server process. HyperSQL can run entirely in memory using a fast memory structure. HyperSQL can use disk persistence in a flexible way, with reliable crash recovery. HyperSQL is the only open-source relational database management system with a high-performance dedicated LOB storage system, suitable for gigabytes of LOB data. It is also the only relational database that can create and access large comma-delimited files as SQL tables. HyperSQL supports three live switchable transaction control models, including fully multi-threaded MVCC, and is suitable for high-performance transaction processing applications. HyperSQL is also suitable for business intelligence, ETL, and other applications that process large data sets. HyperSQL has a wide range of enterprise deployment options, such as XA transactions, connection pooling data sources, and remote authentication.

BUFFER CACHE TUNING

In the preceding chapters, we discussed how to reduce the demand placed on the Oracle database through application design and SQL tuning. We then proceeded to eliminate contention preventing Oracle from processing that demand. Our goal has been to minimize the logical IO demand that is sent from the application to Oracle and remove any obstacles that block that demand. If we've done a good job so far, we've achieved a logical IO rate that is at a realistic level for the tasks that the application must perform. Now it's time to try to prevent as much as possible of that logical IO from turning into physical IO. In this chapter we look at how to optimize buffer cache memory to reduce disk IO for datafile IO operations. The buffer cache exists primarily to cache frequently accessed data blocks in memory so that the application doesn't need to read them from disk.
Buffer cache tuning is, therefore, critical in preventing logical IO from turning into physical IO.

In each release of Oracle since 9i, Oracle has increasingly automated the allocation of memory to the various caches and pools. In 10g, Oracle introduced …

A raw memory access is easily 1,000s of times faster than a disk read. However, because of the complex nature of Oracle memory accesses, with things like latches and mutexes, a logical read might "only" be 100s of times faster.

SUMMARY

Every server process maintains an area in memory called the PGA that is used as a work area for temporary result sets and to sort and hash data. When these work area operations cannot complete in memory, Oracle must write to and read from temporary segments, resulting in significant IO overhead. The total amount of PGA memory available for all sessions is normally controlled by the parameter PGA_AGGREGATE_TARGET. The value is truly a "target," and sometimes sessions must exceed the setting. Furthermore, individual sessions cannot use all of the PGA Aggregate Target; typically only 20 percent will be available to a single session. The overall overhead of temporary disk IO can be measured by observing the time spent in the direct path read temp and direct path write temp wait events. The larger these waits are compared to total active time, the greater our incentive to reduce temporary segment IO, possibly by increasing PGA Aggregate Target. The effect of adjusting the PGA Aggregate Target can be accurately estimated using the advice in V$PGA_TARGET_ADVICE. This view shows the change in the amount of IO that would have occurred in the past had the PGA Aggregate Target been set to a different size.
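
The comparison of temporary IO waits with total active time, and the typical 20 percent per-session share, amount to simple arithmetic. The sketch below is illustrative only: the function names and the sample figures are hypothetical, and in practice the inputs would come from the wait interface rather than from literals.

```python
def temp_io_share(read_temp_secs: float, write_temp_secs: float, active_secs: float) -> float:
    """Fraction of total active time spent in the two temporary-segment wait events."""
    if active_secs <= 0:
        raise ValueError("active_secs must be positive")
    return (read_temp_secs + write_temp_secs) / active_secs


def typical_session_pga_limit(pga_aggregate_target_bytes: int, percent: int = 20) -> int:
    """Approximate PGA available to one session, assuming the typical 20 percent share."""
    return pga_aggregate_target_bytes * percent // 100


if __name__ == "__main__":
    # Hypothetical figures: 30s in direct path read temp, 10s in direct path
    # write temp, 200s of total active time, and a 1 GB PGA Aggregate Target.
    share = temp_io_share(30.0, 10.0, 200.0)
    print(f"temporary IO share of active time: {share:.0%}")
    print("typical single-session limit (bytes):", typical_session_pga_limit(1_000_000_000))
```

A high share suggests that the estimates in V$PGA_TARGET_ADVICE are worth reviewing before changing PGA_AGGREGATE_TARGET.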